Space Shuttle Problems: Long-term Planning Amid Changing Technology

January 28th, 2016

Many enterprises hope to systematically drive competitive advantage and operational efficiency by gaining insight from data. For that reason, they may be contemplating a "data lake" effort, or may already be well into one. These are large, complex efforts, typically focused on integrating vast enterprise data assets into a consolidated platform that makes it easier to surface insights across operational silos. Data lake programs are typically multi-year endeavors, and companies must undertake them amid a rapidly changing technology landscape while also navigating highly dynamic business climates.

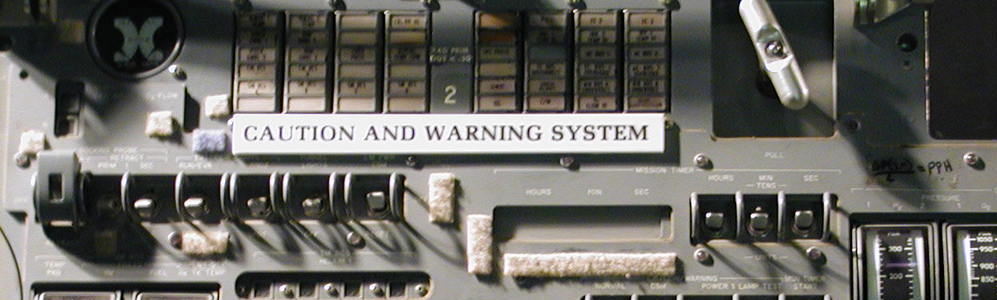

Such programs are replete with what we call space shuttle problems: your spaceship is going to launch in 10 or 12 years, so whatever processors you have today will seem quaint by the time you fly. The challenge is to find a way to take advantage of the technical advances that will inevitably occur throughout a long, complex effort, without halting progress.

The attempt to “future proof” designs leads many organizations into analysis paralysis. Agile, iterative problem-solving approaches can help you avoid that paralysis. They are designed to embrace a changing scope and to be flexible enough to take advantage of unanticipated opportunities. There are limits to this approach, however. I often quip that while agile is great for many things, you can’t wait until you are in space to figure out how to breathe. Some aspects of a solution are so critical that there is little point to building anything else before you know you can solve that part of the overall problem.

How can you manage your implementation in a way that allows you to take maximum advantage of technology innovation as you go, rather than having to freeze your view of technology to today’s state and design something that will be outdated when it launches? You must start by deciding which pieces are necessary now, and which can wait.

Planning is Essential

With a space shuttle, there are components you simply don’t have the freedom to wait for. You need to design the control systems. You have to accept that by the time they are implemented and tested sufficiently to send a lucky few astronauts into space (and hopefully not kill them), you will be running on outdated equipment because you are optimizing your approach for reliability, not performance efficiency.

Beyond components with extreme reliability requirements, there are other aspects of a solution you want to tackle very early in a project. Let's say the value proposition for the data lake effort depends heavily on applying natural language processing (NLP) techniques to unstructured data. On the one hand, NLP is an area of rapid innovation. You might benefit from that innovation if you wait until you've loaded and integrated all of your unstructured data into the lake before choosing your NLP approach. On the other hand, if the NLP approach turns out to be unsuccessful, the time and expense of loading that data, scaling the infrastructure, learning how to use the NLP tool, and so on may all be wasted. Because NLP is evolving so quickly, determining its utility for a given use case typically requires experimentation for that specific purpose. This is exactly the kind of situation where you want to run prototyping exercises early in the project.

The corollary to front-loading efforts to prove out critical but uncertain aspects of a solution is to back-load efforts to implement well-understood aspects of it. Why rush into implementing a user interface? It might be years before the project uses it, and who knows what innovations will arrive in that time. There is little to no risk in waiting, and if the project runs into a major roadblock, no effort has been wasted on it.

Space Shuttle Architecture

Beyond carefully staging how you work through your project's backlog, you can adopt architectural approaches that help you capitalize on innovation. Abstracting technology choices, so that they are not unnecessarily coupled to one another, makes it much easier to change your mind or adopt the latest and greatest down the road.

For example, it used to be common to make data requests directly to an underlying database from a user application. This is the simplest and most efficient approach. However, at Silicon Valley Data Science (SVDS) we avoid this approach at all costs. By implementing a service layer between the application and the database, the application architecture is no longer tightly coupled to the database selection. You can change your database from Oracle to Postgres, or from MySQL to Cassandra, and your application is none the wiser. This is how we can isolate technology choices and support very complex, evolving application architectures without them breaking every time someone changes something.
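To make the service-layer idea concrete, here is a minimal Python sketch built on a few assumptions. The names `CustomerStore`, `CustomerService`, `InMemoryStore`, and `SQLiteStore` are hypothetical and exist only for this illustration. SQLite, from the standard library, stands in for a production database such as Oracle or Postgres. The application talks only to the service, so swapping the storage backend means changing the wiring code and nothing else.

```python
import sqlite3
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Customer:
    customer_id: int
    name: str


class CustomerStore(ABC):
    """Storage contract the service layer depends on (hypothetical interface)."""

    @abstractmethod
    def get(self, customer_id: int) -> Optional[Customer]: ...

    @abstractmethod
    def put(self, customer: Customer) -> None: ...


class InMemoryStore(CustomerStore):
    """Dictionary-backed store, e.g. for tests or early prototypes."""

    def __init__(self) -> None:
        self._rows: Dict[int, Customer] = {}

    def get(self, customer_id: int) -> Optional[Customer]:
        return self._rows.get(customer_id)

    def put(self, customer: Customer) -> None:
        self._rows[customer.customer_id] = customer


class SQLiteStore(CustomerStore):
    """SQL-backed store; stands in for Oracle, Postgres, MySQL, etc."""

    def __init__(self, path: str = ":memory:") -> None:
        self._conn = sqlite3.connect(path)
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
        )

    def get(self, customer_id: int) -> Optional[Customer]:
        row = self._conn.execute(
            "SELECT id, name FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        return Customer(row[0], row[1]) if row else None

    def put(self, customer: Customer) -> None:
        with self._conn:  # commits on success, rolls back on error
            self._conn.execute(
                "INSERT OR REPLACE INTO customers (id, name) VALUES (?, ?)",
                (customer.customer_id, customer.name),
            )


class CustomerService:
    """Service layer: the only component the application talks to."""

    def __init__(self, store: CustomerStore) -> None:
        self._store = store

    def register(self, customer_id: int, name: str) -> None:
        self._store.put(Customer(customer_id, name))

    def display_name(self, customer_id: int) -> str:
        customer = self._store.get(customer_id)
        return customer.name if customer else "unknown customer"


def application(service: CustomerService) -> str:
    """Application code: no SQL and no knowledge of which database is in use."""
    service.register(42, "Ada")
    return service.display_name(42)


# Swapping the database is a one-line change at wiring time.
print(application(CustomerService(InMemoryStore())))  # Ada
print(application(CustomerService(SQLiteStore())))    # Ada
```

In a real system the service layer would more likely sit behind a network API than live in the same process as the application. The decoupling principle is the same either way: `application` never changes when the store does.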

Amazon has used this services approach to dramatic effect. They are able to support a dizzying array of products, warehouses, supply chains, partner vendors, and service offerings successfully, with several aspects of the entire system in flux at any given time. It’s probably the only way possible to integrate tens of thousands of partner inventory systems into what seems like a single store. Could you imagine if Amazon had to update its systems every time one of its small vendor partners updated their inventory system?
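The same principle can be sketched for many heterogeneous partner systems. The following Python example is a hypothetical illustration and makes no claim about how Amazon actually builds its systems. Each partner gets an adapter that translates its own inventory format into a shared `StockLevel` record, and the store aggregates only those records. The vendor formats and the names `InventoryAdapter`, `JsonVendorAdapter`, `CsvVendorAdapter`, and `combined_inventory` are all invented for the sketch. If a vendor changes its format, only that vendor's adapter needs to change.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Protocol


@dataclass(frozen=True)
class StockLevel:
    sku: str
    quantity: int


class InventoryAdapter(Protocol):
    """Contract each partner integration must satisfy (hypothetical)."""

    def stock_levels(self) -> Iterable[StockLevel]: ...


class JsonVendorAdapter:
    """Hypothetical vendor that reports records like {"sku": "A1", "qty": 3}."""

    def __init__(self, records: List[dict]) -> None:
        self._records = records

    def stock_levels(self) -> Iterable[StockLevel]:
        for rec in self._records:
            yield StockLevel(rec["sku"], int(rec["qty"]))


class CsvVendorAdapter:
    """Hypothetical vendor that reports semicolon-separated lines like "A1;5"."""

    def __init__(self, lines: List[str]) -> None:
        self._lines = lines

    def stock_levels(self) -> Iterable[StockLevel]:
        for line in self._lines:
            sku, count = line.strip().split(";")
            yield StockLevel(sku, int(count))


def combined_inventory(adapters: Iterable[InventoryAdapter]) -> Dict[str, int]:
    """Merge every partner's stock into one view; vendor formats never leak out."""
    totals: Dict[str, int] = {}
    for adapter in adapters:
        for level in adapter.stock_levels():
            totals[level.sku] = totals.get(level.sku, 0) + level.quantity
    return totals


partners = [
    JsonVendorAdapter([{"sku": "A1", "qty": 3}, {"sku": "B2", "qty": 1}]),
    CsvVendorAdapter(["A1;5", "C3;2"]),
]
print(combined_inventory(partners))  # {'A1': 8, 'B2': 1, 'C3': 2}
```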

Next Steps

The idea of the space shuttle problem is to help put into perspective how we execute technology-driven projects in a rapidly changing (and improving) technology landscape. The goal is to identify and reduce our exposure to risk and to better understand the compromises involved in technology decisions, while taking maximum advantage of innovation as we go. In some cases, the value gained by forgoing agility may be worth the consequences.

The point is to identify and front-load the poorly understood pieces of your project. Proper use of abstraction techniques can make changing your mind about technology choices as inexpensive and easy as possible, and that maximizes your ability to take advantage of technology innovation. Come to think of it, that's probably the achievable version of "future proof."